
Evaluating the actuarial capabilities of Large Language Models

The spectacular rise of Large Language Models (LLMs) has redefined the landscape of artificial intelligence, paving the way for innovative applications across various sectors. In the intricate domain of reinsurance, where risk assessment plays a pivotal role, automating actuarial processes can yield significant advantages.

In this article, we will explore in detail how LLMs, with their ability to understand and generate natural language, provide groundbreaking solutions to complex reinsurance challenges. In particular, we evaluate a set of state-of-the-art Large Language Models on an actuarial task requiring sophisticated reasoning and calculation: determining total liability from a reinsurance contract.

This article is the second in our series on using large language models (LLMs) for actuaries. At the 100% Actuaries 100% Data Science Congress, held in Paris on November 21, 2023 and organized by the French Institute of Actuaries, we presented the challenges and issues related to using large language models in the actuarial field. Building on the interest generated, this second part goes deeper by evaluating in detail the actuarial capabilities of artificial intelligence models.

This work is part of a process of reflection and continuous improvement aimed at supporting professionals in the sector in understanding these promising new technologies. In particular, we explore the new possibilities offered by Amazon Bedrock in the Paris (France) region, with models from Anthropic (Claude v3), Meta (Llama-3) and MistralAI (Mistral-Large).

Total liability in the context of a reinsurance contract refers to the maximum amount of financial responsibility that a reinsurer may have to assume under the terms of the contract. This liability typically represents the aggregate sum of potential claims that the reinsurer may be obligated to pay to the primary insurer, known as the ceding company, in the event of covered losses.

Therefore, calculating total liability on contracts is crucial for reinsurers to accurately assess their financial obligations and manage risk. In particular, this task holds significant importance in the reinsurance industry for the following reasons:

Financial Stability: Reinsurers need to ensure they have adequate reserves to cover potential losses from each contract. By calculating total liability, they can determine the amount of funds they need to set aside to fulfill their obligations, thereby maintaining financial stability.

Risk Management: Understanding the total liability on each contract allows reinsurers to assess their overall risk exposure. This enables them to make informed decisions about risk mitigation strategies, such as purchasing additional reinsurance or diversifying their portfolio.

Compliance: Reinsurers are often subject to regulatory requirements that mandate the calculation of liabilities. Compliance with these regulations is essential for avoiding penalties and maintaining the trust of stakeholders, including policyholders, investors, and regulators.

Scenario Analysis: Reinsurers often conduct scenario analysis to assess the potential impact of catastrophic events on their portfolio. By calculating liabilities per risk category, they can simulate different scenarios and evaluate their financial resilience under various conditions, helping them prepare for, and respond to, potential crises effectively.

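A full pipeline automating the calculation of total liability from a contract involves two main steps: extracting the relevant information from the contract, then reasoning and calculating over the extracted information to determine the liability.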

The extraction step is a generic NLP task where LLMs have already shown great capability. We have numerous open-source benchmarks involving massive, diversified datasets to measure their performance on this task. We, therefore, focus our attention on the second step to quantify two main aspects in current top-tier Large Language Models: their actuarial knowledge and their domain-specific reasoning/calculation skills.
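As a rough illustration of what the second step asks of a model, here is a minimal sketch of a total-liability calculation under simplified conventions. The field names and the two formulas are assumptions made for the example (an excess-of-loss layer whose limit can be reinstated a fixed number of times, and a quota share capped by an event limit). Real treaties add aggregate limits, deductibles and other clauses that this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class ExcessOfLossTerms:
    """Hypothetical extracted fields for an excess-of-loss layer."""
    limit: float         # cover per occurrence, in currency units
    reinstatements: int  # number of times the limit can be reinstated


@dataclass
class QuotaShareTerms:
    """Hypothetical extracted fields for a quota-share treaty."""
    ceded_share: float   # fraction ceded to the reinsurer, between 0 and 1
    event_limit: float   # maximum 100% loss covered per event


def total_liability_xl(terms: ExcessOfLossTerms) -> float:
    # Simplifying assumption: the reinsurer can pay the original limit
    # plus one further limit for each reinstatement.
    return terms.limit * (1 + terms.reinstatements)


def total_liability_qs(terms: QuotaShareTerms) -> float:
    # Simplifying assumption: the reinsurer's maximum is its share of the
    # event limit, with no other cap applying.
    return terms.ceded_share * terms.event_limit


if __name__ == "__main__":
    xl = ExcessOfLossTerms(limit=10_000_000, reinstatements=2)
    qs = QuotaShareTerms(ceded_share=0.25, event_limit=40_000_000)
    print(total_liability_xl(xl))
    print(total_liability_qs(qs))
```

In the benchmark, the model receives extracted terms of this kind and must pick and apply the right rule for the treaty type itself, which is why the task tests both actuarial knowledge and calculation.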

Most, if not all, state-of-the-art Large Language Models are now accessible through safe channels like Amazon Bedrock Service for Meta, Anthropic or MistralAI models and Azure OpenAI Service for OpenAI models. However, in sensitive industries such as reinsurance, companies also consider the possibility of self-hosting LLMs for maximum security and control over their data.

For small to mid-sized firms with limited resources, this alternative is possible with SLMs (“Small” Language Models of reasonable sizes, generally ranging from 1 to 20 billion parameters), which can affordably be deployed and maintained locally or using cloud infrastructure. The performance gap between SLMs and top-tier LLMs can be narrowed by fine-tuning the SLMs on company-specific tasks and data.

Similar to the 2023 experiment, this benchmark was conducted using a mixed dataset of insurance contracts and reinsurance treaties of various types (quota-share, surplus, excess of loss, etc.). For each contract, a large language model was used to extract the necessary information that constitutes the input of our reasoning/calculation task.

The Large Language Models selected for this evaluation are OpenAI’s GPT-4, Anthropic’s Claude-3 (with its three variations: Haiku, Sonnet, and Opus), MistralAI’s Mistral-Large and Meta’s Llama3 (two variations: 8B-instruct and 70B-instruct). GPT-4 was accessed via OpenAI’s API while all the other models were used through Amazon Bedrock.
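For readers who want to try a similar setup, the sketch below shows one way to query a Bedrock-hosted model from Python with boto3's Converse API. It is not necessarily how the benchmark itself was run: the helper names, the default region (`eu-west-3`, i.e. Paris) and the generation settings are assumptions, and the model identifier must be checked against what is enabled in your own account and region.

```python
def build_converse_request(model_id, prompt, max_tokens=1024, temperature=0.0):
    """Build keyword arguments for the Bedrock Converse API (hypothetical helper)."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_model(model_id, prompt, region_name="eu-west-3"):
    """Send one prompt to a Bedrock model and return the text of its reply.

    Requires AWS credentials and access to the model in the chosen region.
    """
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]
```

A call would look like `ask_model("anthropic.claude-3-haiku-20240307-v1:0", prompt)`, where the identifier is only an example. Because the request format is the same for every model, the same prompt can be sent to each one by changing only `model_id`.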

Before the launch of Amazon Bedrock in Europe and the availability of the previously mentioned models, we had opted for fine-tuning and self-hosting SLMs as a low-cost solution with maximum confidentiality guarantees. In particular, we self-hosted a Zephyr-beta model (a variant of Mistral 7B, instruction-tuned by Hugging Face) as our baseline open-source SLM using SageMaker inference endpoints. We then used GPT-4 as a teacher to further fine-tune this model, using SageMaker training jobs, on a dataset of 1,000 synthetically generated examples (i.e. extraction-response pairs where the reasoning is done by GPT-4). We stopped at 1,000 examples because that is the point at which the model's learning curve plateaued. This fine-tuned version was also hosted on our infrastructure using SageMaker endpoints.

We therefore include these two models, respectively representing baseline and fine-tuned SLMs, in our benchmark. The aim is to quantify the trade-off for low-resource companies between using highly performant LLMs through safe channels like Bedrock and self-hosting smaller models using local or cloud infrastructure. More specifically, we will mainly be measuring a performance-control trade-off since both alternatives provide guarantees for data confidentiality.

Central to the study's methodology was the innovative "LLM as a judge" approach, widely used in academia and the industry to swiftly conduct large-scale benchmarks with minimal costs. Specifically, this method consists of using a powerful LLM, presented with a predefined set of evaluation criteria and a grading scale, to evaluate model responses, bypassing the need for manual evaluation conducted by expensive subject matter experts.

In our case, the LLM Judge was provided with a detailed description of four main criteria to evaluate separately on a scale of 1 to 5:

To ensure robustness, three leading LLMs—Opus, GPT-4, and Mistral-Large—were enlisted to evaluate the performance of various models, including themselves. Naturally, we first subjected these models to human evaluation (by subject matter experts) on select data points. Once these models were validated as competent judges by human experts, we could then scale up the benchmark by using them as proxies.

The global takeaway from this initial step of human reviews was that despite occasional inaccuracies in a few instances, all three models displayed actuarial knowledge, as well as reasoning skills, rendering them sufficiently competent to be used as evaluators for our benchmark.
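To make the scoring mechanics concrete, here is a minimal sketch of how grades from several judges could be combined into one score per model response. The criterion names are placeholders, since the four criteria are not listed here, and averaging across judges and criteria is an assumption rather than the exact aggregation used in the benchmark.

```python
from statistics import mean

# Placeholder names: the four actual criteria are not given in the text.
CRITERIA = ("criterion_1", "criterion_2", "criterion_3", "criterion_4")


def validate_grades(grades):
    """Check that one judge graded every criterion on the 1-to-5 scale."""
    for name in CRITERIA:
        score = grades[name]
        if not 1 <= score <= 5:
            raise ValueError(f"{name}: grade {score} is outside the 1-5 scale")


def aggregate_scores(grades_by_judge):
    """Average the grades per criterion across judges, then across criteria."""
    for grades in grades_by_judge.values():
        validate_grades(grades)
    per_criterion = {
        name: mean(grades[name] for grades in grades_by_judge.values())
        for name in CRITERIA
    }
    overall = mean(per_criterion.values())
    return per_criterion, overall


if __name__ == "__main__":
    # Made-up grades for a single model response, one dict per judge.
    example = {
        "opus": dict(zip(CRITERIA, (5, 4, 4, 5))),
        "gpt-4": dict(zip(CRITERIA, (4, 4, 5, 5))),
        "mistral-large": dict(zip(CRITERIA, (5, 4, 3, 5))),
    }
    print(aggregate_scores(example))
```

Keeping the per-criterion averages alongside the overall score makes it possible to see whether a model loses points on knowledge or on calculation, and the same function can be applied to human grades to compare them with the judges' grades.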

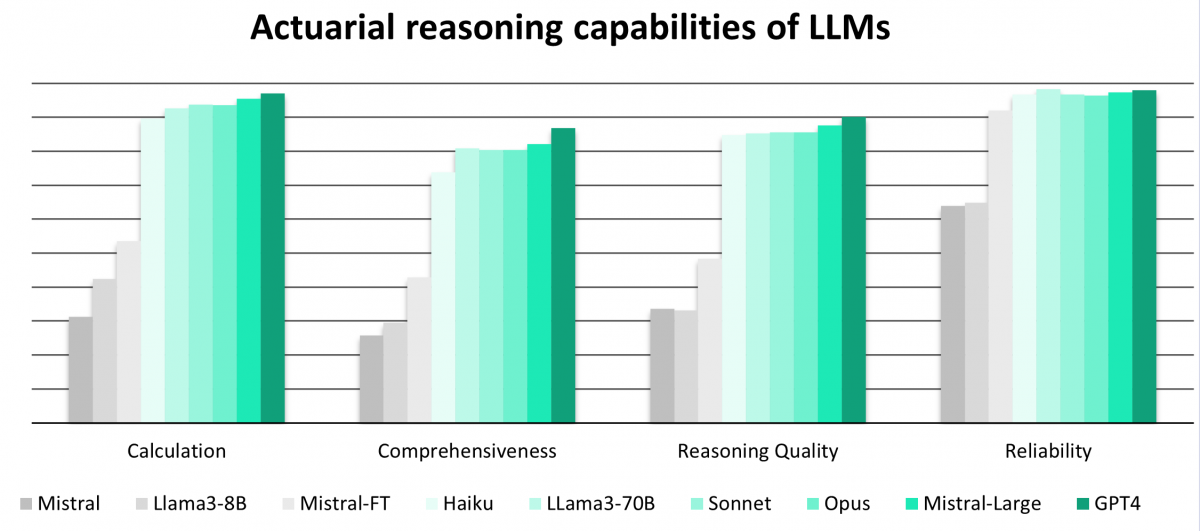

The results, summarized

Interestingly, we see that the provider LLMs used through Bedrock are all highly performant in this task and are on par with GPT-4. Indeed, GPT-4 is closely followed by Mistral-Large (a mere 1.2% drop in performance), the Claude-3 models (2% drop for Opus and Sonnet, 3.5% for Haiku) and Llama3-70B (2% drop). The SLMs perform reasonably well, especially when fine-tuned (15% performance gain over the base model), but are still far from the level of top-tier LLMs in reasoning and calculation skills (20% drop on average).

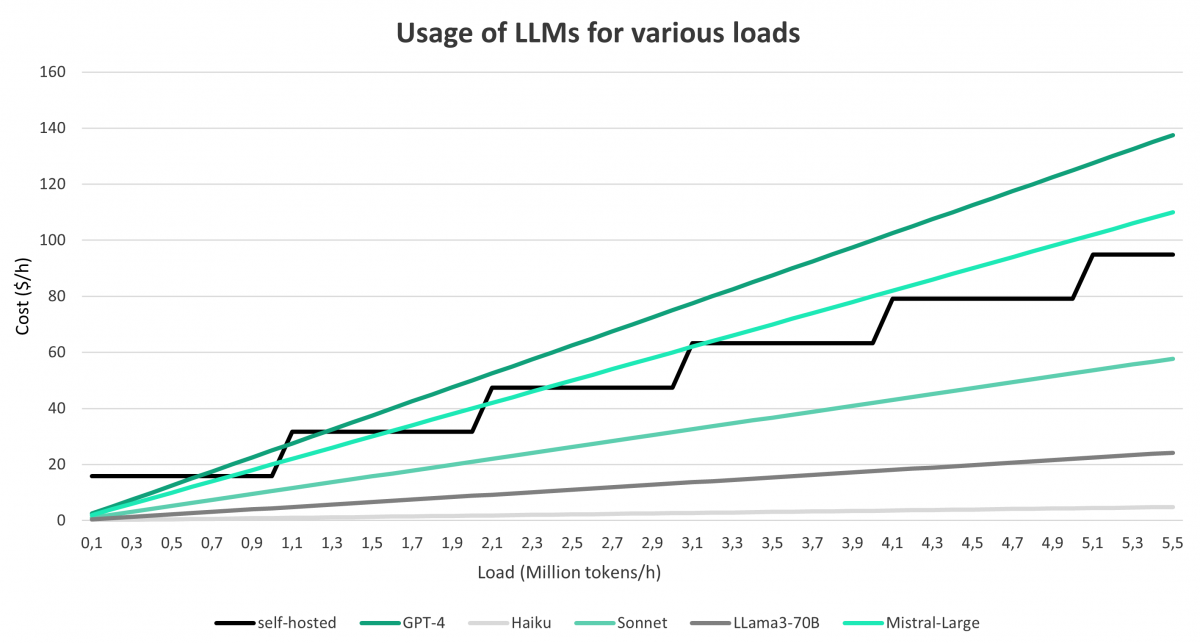

We also compared the usage costs of the different models, as well as the two main approaches (fine-tuning and self-hosting SLMs vs using powerful LLMs through Bedrock). For the provider LLMs, we used the Amazon Bedrock pricing catalog as a reference. As for the SLMs, we calculated the costs assuming the use of SageMaker endpoints with g5.12xlarge instances. Our actuarial reasoning use case involves requests of 1,000 input tokens and 500 output tokens on average. Furthermore, based on this benchmark, we estimate that a single g5.12xlarge instance can handle around 8 such concurrent requests with acceptable latency.

A summary of cost analysis

The consecutive jumps in hourly costs for a self-hosted model occur because, as the load increases, we must add new instances, which are billed by duration of use. By contrast, the cost of the LLMs provided through Bedrock is directly determined by the number of input and output tokens. The chart clearly shows that using provider LLMs through Amazon Bedrock is much less costly, especially for lower loads. Moreover, we assumed ideal conditions for self-hosting, i.e. continuous and homogeneous usage of the models along with a scale-to-zero policy while ignoring cold starts, which means that real-world self-hosting costs are expected to be higher.
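The two billing models can be sketched as follows. The request size (1,000 input and 500 output tokens) and the capacity of 8 concurrent requests per instance come from the figures above. The prices are placeholders to be replaced with current values from the pricing pages, and the function names are hypothetical.

```python
import math

INPUT_TOKENS = 1_000                  # average input size per request
OUTPUT_TOKENS = 500                   # average output size per request
CONCURRENT_REQUESTS_PER_INSTANCE = 8  # estimated capacity of one g5.12xlarge


def self_hosted_hourly_cost(concurrent_requests, instance_price_per_hour):
    """Step function: one more instance is billed for every 8 concurrent requests."""
    if concurrent_requests <= 0:
        return 0.0  # idealized scale-to-zero policy, cold starts ignored
    instances = math.ceil(concurrent_requests / CONCURRENT_REQUESTS_PER_INSTANCE)
    return instances * instance_price_per_hour


def token_based_hourly_cost(requests_per_hour, price_in_per_1k, price_out_per_1k):
    """Linear function: the cost is proportional to the tokens processed."""
    cost_per_request = (
        INPUT_TOKENS / 1_000 * price_in_per_1k
        + OUTPUT_TOKENS / 1_000 * price_out_per_1k
    )
    return requests_per_hour * cost_per_request


if __name__ == "__main__":
    # Placeholder prices, not actual AWS rates.
    for load in (1, 8, 9, 16, 17):
        print(load, self_hosted_hourly_cost(load, instance_price_per_hour=7.0))
    print(token_based_hourly_cost(100, price_in_per_1k=0.004, price_out_per_1k=0.012))
```

The ceiling in the self-hosted function is what produces the jumps: a ninth concurrent request costs a whole extra instance, whereas the token-based cost only grows by the price of that one request.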

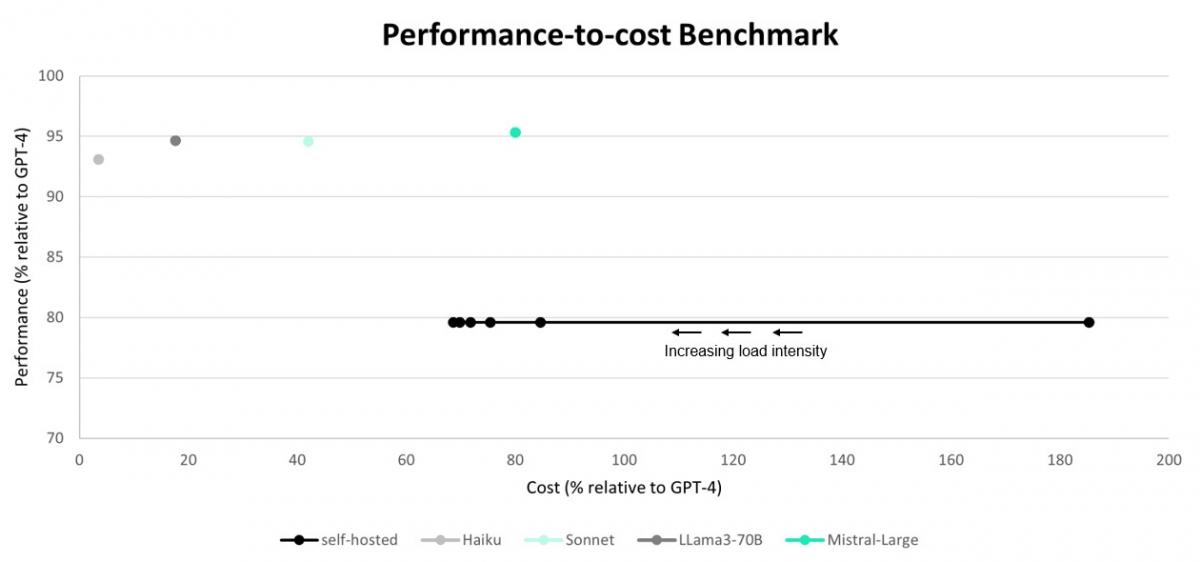

Performance and cost analysis benchmarks

As we can see, the provider LLMs are very close in performance for our use case but differ greatly in terms of costs. The self-hosted, fine-tuned model offers reasonable performance, but the cost of deployment is only competitive under an intense (and continuous) load.

In this study, we presented a comprehensive analysis of the performance of different LLMs on a highly specialized task requiring domain-specific knowledge and sharp reasoning skills. Three key findings can be derived from our benchmark:

Large language models exhibit profound knowledge and actuarial reasoning skills, offering transformative potential for automation and hybridization across reinsurance operations.

The rise of competitive alternatives in the LLM industry, facilitated by platforms like Amazon Bedrock, challenges GPT-4's supremacy, fostering innovation, diversification, and partnership opportunities with better guarantees of safety and control.

Direct utilization of powerful base models, complemented by adept prompt engineering, consistently outperforms fine-tuning smaller models, enhancing efficiency and reducing costs for organizations.